We’ll try to do this without hype because Russ Taylor and Rich Superfine and Sean Washburn tell us that hype doesn’t help. So we are not going to promise you a time when you will stroll around in an airborne cloud of teeny-tiny machines all invisibly whirring in tune with your needs. We are not going to promise you much of anything at all. Except a story.

So here goes.

From this point forward, please think small. Virus, molecule, atom. That small. Not so many years ago, life on that scale was fuzzy and remote, like faraway stars. A scientist could see these tiny things, dimly, but the rest of us took them on faith.

Not any more. Let’s say you have a son or daughter at Orange High School in Hillsborough, North Carolina. Your teenager may have sat down at a computer there, fired up an Internet link to the physics and astronomy department in Chapel Hill, goosed a robotic arm into gear, and whacked, shoved, mashed or otherwise bullied a virus. Yes, a teenager can actually do this.

There is a long word for this kind of shoving and mashing and whacking: nanomanipulation. Nano means, roughly, “a billionth.” A nanometer is one billionth of a meter. So a nanoManipulator is something that manipulates nano-size stuff.

This is the scale we’ll be talking about, so, from here on, please expect a bit of nano-this and nano-that.

A Jolt of the Juice

First, who are these nanoguys? About 18 years ago, when Russ Taylor was a teenager going home after school to hot-rod his father’s computers, Rich Superfine (his real name) finished college and went to work as a technician at Bell Laboratories. Sean Washburn was working in an IBM research lab in Yorktown Heights. At that moment, in 1982, two scientists at IBM Zurich, Gerd Binnig and Heinrich Rohrer, were inventing the scanning tunneling microscope, the first of the scanning probe microscopes. The invention struck like a bolt of lightning, and Superfine felt the juice. So did Sean Washburn.

To see why this invention was such a big deal, we need to talk hardware for a minute. Think about an ordinary microscope. It uses reflected light magnified through lenses. This is okay for stuff as small as bacteria or cells. But a wave of green light is about 540 nanometers long, more than a thousand times longer than, say, a carbon atom. At that scale, ordinary microscopes go blind.
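For readers who want the arithmetic behind “go blind,” here is a rough check. It leans on the Abbe diffraction limit, the standard textbook estimate of the finest detail a lens-based microscope can resolve, which the story itself does not mention. Two inputs are assumptions chosen for illustration: a numerical aperture (NA) of about 1.4, typical of a good oil-immersion objective, and a carbon atom about 0.3 nanometers across as a round figure.

$$
d \approx \frac{\lambda}{2\,\mathrm{NA}} \approx \frac{540\ \mathrm{nm}}{2 \times 1.4} \approx 190\ \mathrm{nm},
\qquad
\frac{\lambda}{d_{\mathrm{C}}} \approx \frac{540\ \mathrm{nm}}{0.3\ \mathrm{nm}} = 1800
$$

Even in the best case, then, a light microscope smears together anything closer than several hundred atom widths.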

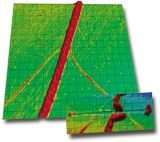

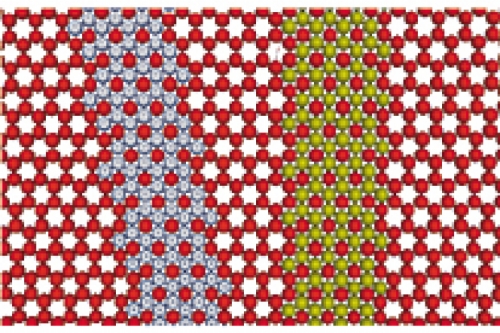

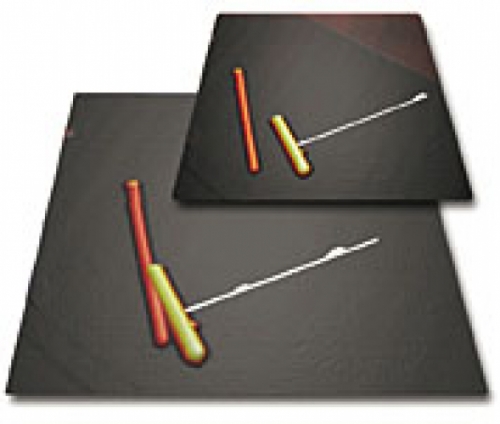

The scanning probe microscope was different. It used a tiny stylus mounted on a swinging arm, something like the tone arm of an old-fashioned record player except a great deal smaller and more precise. As the tip swept back and forth across a surface, gliding up and down over bumps and dips, the arm sent a stream of data to a computer. Like a blind man reading braille, the scanning probe microscope saw by touch, not by light.

At Bell Labs, scientists began aiming this new instrument at silicon, the stuff of microchips and the material on which the computer revolution would take its magic-carpet ride. “There was a particular surface of silicon that people did not understand,” Superfine recalls. “Then this new technique came along, and suddenly they were able to see the individual atoms sitting on the surface of the silicon. And so here was a controversy that had been raging for years, and it was like the proverbial elephant in the dark, with different scientists grabbing onto a leg, a trunk, an ear, and all arguing about the shape of the elephant. And then suddenly someone turns on the lights and you can see the whole elephant.”

But the elephant was sketchy, at first. When Superfine talks at conferences, he pulls out a copy of the research paper by Rohrer and Binnig. The paper contains a photo of their cardboard model, made with scissors and glue.

“Each time the microscope went over the surface, they recorded a line, and this line is a curve, in space,” Superfine explains. “So when you collect an image, what you’re doing is you’re putting a sequence of those curves together, to make a continuous picture. Well, they didn’t have the technology for putting those lines together to make a picture. So they took each one of those lines, they drew it onto cardboard, they cut out each line, then they glued them together, and they made a picture of this cardboard model, and that’s what they sent in with their paper, essentially the paper that won them the Nobel Prize.”
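The procedure Superfine describes (one recorded line per pass, then a stack of lines to make a picture) is the job software later took over. The sketch below is a toy illustration under stated assumptions, not the instrument’s actual software: the “surface” is an invented Gaussian bump standing in for an atom, heights are in arbitrary units, and the function names `surface_height`, `scan_line`, `collect_image`, and `to_ascii` are hypothetical.

```python
import math


def surface_height(x: float, y: float) -> float:
    """Hypothetical surface: one Gaussian bump (arbitrary units) centered at (5, 5)."""
    return math.exp(-((x - 5.0) ** 2 + (y - 5.0) ** 2) / 4.0)


def scan_line(y: float, width: int) -> list[float]:
    """One pass of the tip: sample the height at each x position along row y."""
    return [surface_height(float(x), y) for x in range(width)]


def collect_image(width: int, rows: int) -> list[list[float]]:
    """Stack successive scan lines, in order, into a 2-D height map (row = y)."""
    return [scan_line(float(y), width) for y in range(rows)]


def to_ascii(height_map: list[list[float]]) -> str:
    """Crude rendering: denser characters for taller points."""
    ramp = " .:-=+*#%@"
    top = max(max(row) for row in height_map)
    lines = []
    for row in height_map:
        # Scale each height into an index 0..len(ramp)-1.
        lines.append("".join(ramp[int(h / top * (len(ramp) - 1))] for h in row))
    return "\n".join(lines)


if __name__ == "__main__":
    image = collect_image(width=11, rows=11)
    print(to_ascii(image))
```

Each inner list plays the role of one cardboard strip; building the list of lines in order is the gluing step.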

Nobody wanted to be fooling with cardboard and glue, so a few researchers began programming computers to take the probe data and generate pictures, with simulated shadows and glowing highlights. “Some of these images were beautiful,” Superfine recalls. “They had emotional impact.”
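The “simulated shadows and glowing highlights” come from treating probe data as a height map and lighting it. The story does not say which lighting model those early programs used; this sketch assumes the simplest common one, Lambertian (diffuse) shading, in which a patch of surface is brighter the more directly it faces the light. The function name `shade` and its parameters are illustrative, not taken from any real package.

```python
import math


def shade(height_map, light=(1.0, 1.0, 1.0), z_scale=1.0):
    """Return a brightness in [0, 1] for each point of a height map.

    Assumes Lambertian (diffuse) shading: brightness is the cosine of the
    angle between the surface normal and the light direction, clamped at 0.
    Border points lack a neighbor on one side, so they are left at 0.0.
    """
    # Normalize the light direction once.
    length = math.sqrt(sum(c * c for c in light))
    lx, ly, lz = (c / length for c in light)
    rows, cols = len(height_map), len(height_map[0])
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(1, rows - 1):
        for j in range(1, cols - 1):
            # Central-difference slopes along x (columns) and y (rows).
            dzdx = z_scale * (height_map[i][j + 1] - height_map[i][j - 1]) / 2.0
            dzdy = z_scale * (height_map[i + 1][j] - height_map[i - 1][j]) / 2.0
            # The normal of the surface z = f(x, y) points along (-dz/dx, -dz/dy, 1).
            nx, ny, nz = -dzdx, -dzdy, 1.0
            n_len = math.sqrt(nx * nx + ny * ny + nz * nz)
            cos_angle = (nx * lx + ny * ly + nz * lz) / n_len
            out[i][j] = max(0.0, cos_angle)
    return out


if __name__ == "__main__":
    # A ramp rising toward +x, lit from two different sides.
    ramp = [[float(x) for x in range(5)] for _ in range(5)]
    print(shade(ramp, light=(-1.0, 0.0, 1.0))[2][2])  # slope tilted toward the light: bright
    print(shade(ramp, light=(1.0, 0.0, 1.0))[2][2])   # slope tilted away: dark
```

Raising `z_scale` exaggerates relief and sharpens the contrast between lit and shadowed slopes, which is part of what makes such pictures vivid.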

Hard Problems, Cool Tools

Jump to 1991. Rich Superfine has finished a Ph.D. and has landed in Carolina’s physics and astronomy department with a big-ticket item on his wish list: a new type of scanning probe microscope known as an atomic force microscope (AFM). He has never forgotten those years when the lights went on at Bell Labs. And he has never forgotten the pretty pictures. But he hasn’t yet met the people who will help him put the two together.

Meanwhile, Warren Robinett from computer science was getting together with a friend of his from graduate school, Stan Williams, who was a professor of chemistry at UCLA at the time. Williams was working with a scanning probe microscope, and Robinett had a background in virtual reality, the use of computers to simulate real environments. Together, they began combining virtual-reality techniques with microscopy. Fred Brooks, who was leading graphics projects in computer science, thought the work might be a good fit for one of his students, Russ Taylor. “This sounds like an interesting project,” Brooks said. “Why don’t we put Russ on it?”

To read between the lines here, you need to know about Fred Brooks.

Computer scientists, generally speaking, are not known for paying homage. By habit, they question authority. They value the new, the now, the next. They are not the sort of people who commission a lot of bronze busts. In the case of Fred Brooks, however, they have made an exception. They have set a bronze bust of Brooks in a fine, sunny room in Sitterson Hall, to remind themselves that this man, their colleague and elder statesman, is also their visionary leader. It was Brooks who had shown them that computers could help people see. It was Brooks who had insisted that computer science should serve something other than itself, that it should reach out to the natural sciences, to engineering and architecture, to the world, and find real problems to solve. And so when Brooks said, “This sounds like an interesting project,” people knew what he meant. He meant that the project would be hard, intellectually. It would reach way beyond the bounds of computer science. And it would be real.

That was just fine by Russ Taylor. Taylor was a toolsmith and a problem solver who wanted hard problems and cool tools. Suddenly, here they were. So he went to work. He would need every scrap of what he’d learned: parallel computation (sort of like using several hammers to drive the same nail), graphics hardware acceleration, virtual environments, and distributed systems (computers at various locations working together). These were strengths of the department, and they happened to be precisely the strengths the project required. Taylor also needed a graphics computer. A fast one.

“At the time the nanoManipulator was developed,” Taylor says, “it could only have been done here because we needed to use Pixel-Planes 5, which was the world’s fastest graphics computer. There was only one, and it was here.” Fortunately, when Taylor looked around his department, he also found head-mounted displays for experiments in virtual reality, and a robotic arm used to dock simulated drugs into proteins. So a lot of the pieces he needed were ready and waiting. “Having all of that equipment here and all of that expertise here let me as a student just go do this,” Taylor says.

It wasn’t quite that simple, of course. The R&D took years.

Pictures from the Dark Side

As Taylor and his mentors in computer science made progress on the nanoManipulator, Rich Superfine began to realize that he needed it. “It became clear to us,” he says, “that the manipulations we were trying would be very difficult to do sitting at a keyboard, using keyboard commands to push a particle around.”

When Superfine met him, Taylor was working on an “interface,” the computer program that would use images to help people visualize nanoscale microscopy in a more natural, intuitive way.

“Originally I was skeptical,” Superfine says. “You know the easy comment is that it’s just a bunch of pretty pictures. But after working on this project for all these years I’ve, as they say, ‘come over to the dark side.’ I’m now a real proponent of pretty pictures.”

The pretty pictures did more than make the work appealing. Painting an image of the data took advantage of the human aptitude for rapidly seeing and interpreting patterns. This aptitude is a product of human evolution, Superfine says. In the wild, understanding at a glance the subtleties of a facial expression, an animal’s markings, or the lay of the land can spell the difference between death and survival.

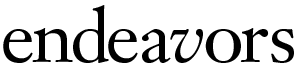

Computer scientists had been learning how to compute and render images of familiar kinds of spaces (the interiors of houses or offices or factories, for instance) realistically and rapidly enough that people could don a virtual-reality helmet and take a virtual tour. This was complicated aplenty, since a stroll through a virtual kitchen might require thousands of polygons (the geometric shapes from which virtual objects are assembled) and millions of computations to render. But here was a chance to draw and paint surfaces and objects so small that people hadn’t even seen them, whose dimensions and properties were still largely unknown. So the same system that would reveal the object’s properties would also have to render it on the screen. And all of this would have to happen immediately, in “real time,” because when you do experiments, it is often impractical to move something and then wait for a picture to see what happens next. As Sean Washburn puts it, “You have to respond on the fly.”

To engineer these attributes into the system, Washburn spent seven or eight hours with Russ Taylor every Tuesday for more than a year working on the nanoManipulator. The technical hurdles were enormous. Washburn, an expert in quantum transport (how electrons move through very small structures), had to work out the dynamics of how the tip interacted with various materials on the surface. He wanted the tip not only to record the surface but also to move things around on it. When the team added a “force-feedback” device, the scientists could feel, through changes in pressure from a joystick-like controller, the resistance of objects as the tip touched them.
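The story doesn’t describe how the nanoManipulator turned tip measurements into pressure on the user’s hand. One widely taught approach in haptic rendering, assumed here purely for illustration, is a penalty or “virtual spring” model: no force while the probe is above the surface, and a push back proportional to how far it has pressed in. The function names `feedback_force` and `press` and the stiffness values are hypothetical.

```python
def feedback_force(probe_z: float, surface_z: float, stiffness: float) -> float:
    """Upward force on the user's hand, in arbitrary units.

    Penalty ("virtual spring") model: zero force while the probe is above
    the surface, and Hooke's-law force stiffness * depth once it presses below.
    """
    depth = surface_z - probe_z
    return stiffness * depth if depth > 0.0 else 0.0


def press(surface_z: float, stiffness: float, steps: int = 5, step: float = 0.1):
    """Lower a probe from just above the surface, recording the force at each step."""
    forces = []
    probe_z = surface_z + step
    for _ in range(steps):
        forces.append(feedback_force(probe_z, surface_z, stiffness))
        probe_z -= step
    return forces


if __name__ == "__main__":
    # A stiffer (hypothetical) material resists harder at the same depth.
    print("soft: ", press(surface_z=1.0, stiffness=2.0))
    print("stiff:", press(surface_z=1.0, stiffness=20.0))
```

Practical haptic systems often layer damping and filtering on top of this, and in a real microscope the surface height would come from measurement rather than a stored number; the spring model only shows the basic idea of feeling resistance.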

Gradually, the system began to display the nanoworld as if lit by the sun, with highlights and shadows that conveyed texture, size, and shape. Strange new landscapes, full of rich colors and contours, appeared on the screen. The system made the work so vivid and immediate, it almost seemed like play. “It has a lot of the same things you see in fancy arcade games,” Washburn says.

Superfine says that this video-game quality helps sustain excitement about the work, especially for students.

“The experiments that have to get done these days at the highest level are very hard,” he says. “They take a lot of time and extended concentration. And it takes a lot of training and experience to really understand in a physical sense what the data mean. But if a student can sit down and see a picture, a representation of what’s happening on the surface, then that gets them excited. And that excitement helps us overcome some of the hurdles in training new scientists.”

But while graphics made it easier to sort information and recognize patterns, Superfine and Washburn had to keep reminding themselves that this gleaming new world on their computer screens was in large part illusion, a sort of visual fiction to help the brain arrive at something real. “The system is enormously powerful,” Washburn says, “so it’s easy to be seduced by the graphics, to get caught up in the way things look.”

For Russ Taylor, the test of the graphic interface was its usefulness. He avoided rendering extraneous detail, even if that meant painting data-sparse areas unnaturally flat and blank, like uncharted terrain.

From Slaughterhouse to Nanohouse

As the team beat a path between physics and computer science, the project attracted new attention and collaborators. By the mid-1990s, several dozen faculty members and students from various disciplines were part of the mix, struggling to learn how to talk to each other, to match up their interests.

This was not an easy way to work. Scientists typically prefer to immerse themselves in their science, to tune in to the music of their field and tune out the noise. But in the house of nanoscience, the team had to stay tuned to a lot of different channels. And they had to attend what Washburn calls “an infuriating number of meetings.”

Washburn, like the others, knew that the meetings were a necessary evil. The nanoManipulator and the science around it were revolutionary. They were blurring academic boundaries, rewriting rules. There was no turning back.

“A lot of the stuff that you can do by yourself, sitting alone in a lab, has been done,” Superfine says. “The problems we’re dealing with today are so complex that there’s no single kind of training that will encompass them.”

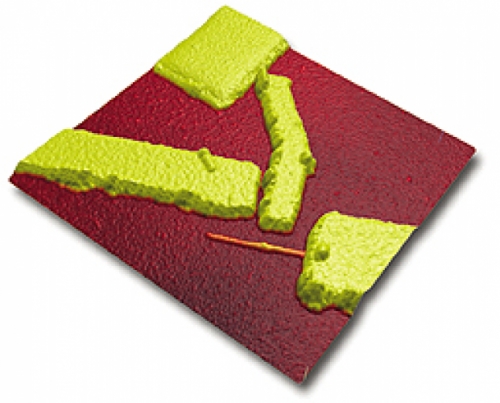

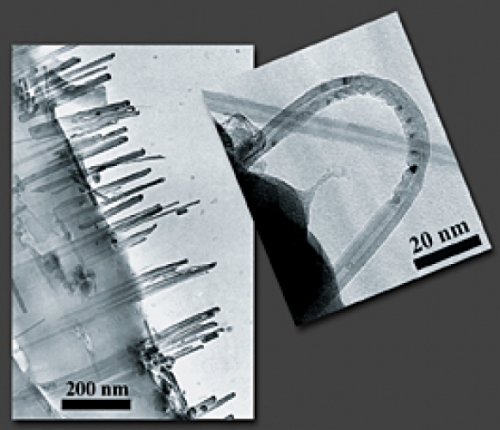

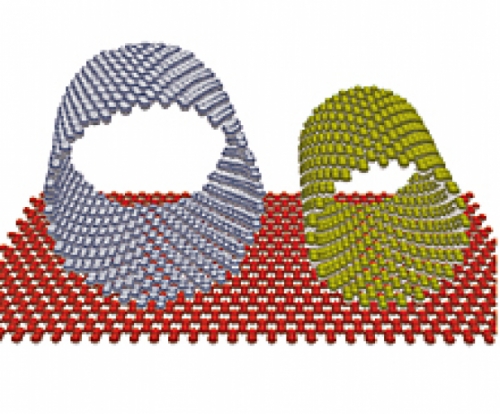

Consider, for example, the group’s recent work with motor proteins. Motor proteins are like tiny trolley cars. In cells, they run along a tangled network of microtubules, carrying cargo. To the nanoguys, this elegant little transportation system suggested a world of possibilities for doing big jobs in small ways. They planned to combine motor proteins with carbon nanotubes, for instance, to see if they could make electrical circuits much smaller than the computer-chip circuits of today.

“Think about all of the disciplines involved in such an enterprise,” Superfine says. “You get together in a meeting with biologists to talk about how to get some motor proteins, and they’re saying something like, ‘Yeah, we got a call from the slaughterhouse, and next Wednesday it’s time to go out there with some ice.’ You know, it really starts there. Because the biologists know how to get the cow brains and purify them to the point where we’re ready to try and stick the motor protein on a surface. But then we have to image it on a surface, so you need computer graphics. You have to hook the protein up to a nanotube (well, we don’t make nanotubes, but Otto Zhou down the hall knows how to make them), and then we have to hook electric leads up to it, and the electrical properties are pretty complex. Well, Sean Washburn has studied electrical properties in semiconductors for twenty years. So it’s all these different kinds of expertise being brought together to study problems that are way too complicated for any one discipline to understand.”

The Forest and the Trees

So people were learning each other’s languages, working each other’s problems. As a result, the team began to make some offbeat connections. Studies of the physical behavior of carbon nanotubes, for instance, seemed relevant to biology, as well.

“The nanotubes are basically molecule-size,” Superfine explains, “and the behavior you see from them is due to interactions among atoms. This is also true in biological systems. For example, the way DNA gets transcribed is that you have one molecule moving over a long strand of DNA. That takes a certain amount of energy. So in many ways the language of understanding the transcription process is very similar to thinking about a ball rolling on a surface. You think about friction and the energy cost of having one molecule move over the other.”

But thinking on this scale required adjustments. People accustomed to studying the forest didn’t always immediately see the point of looking at individual trees.

“For a long time, people measured the collective properties of a system,” Superfine says. “You looked at the behavior of millions of molecules, and you looked for the average behavior. If you’re studying viruses in gene therapy, for instance, you work with maybe a billion viruses in a test tube. You put in some chemical drops and you shake the tube, and then you measure what happened to those billion viruses on average. For us, we say, what’s up with each individual virus? Did each individual virus open up by itself to spit out the DNA?”
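Superfine’s point about averages can be made with a toy calculation. The two populations below are invented for illustration, not measurements: in one, half the viruses open completely and half stay intact; in the other, every virus opens halfway. A test-tube measurement of the average cannot tell them apart, while a look at individuals can. The helper `fraction_fully_open` is hypothetical.

```python
from statistics import mean

# Hypothetical populations of ten viruses each (invented values, not data).
# Each number is how far one virus has opened: 1.0 = fully open, 0.0 = intact.
all_or_nothing = [1.0] * 5 + [0.0] * 5
all_halfway = [0.5] * 10


def fraction_fully_open(population):
    """A single-particle question: what share of individuals opened all the way?"""
    return sum(1 for v in population if v == 1.0) / len(population)


if __name__ == "__main__":
    # The ensemble averages match...
    print(mean(all_or_nothing), mean(all_halfway))
    # ...but the individual-level statistic does not.
    print(fraction_fully_open(all_or_nothing), fraction_fully_open(all_halfway))
```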

For the team, questions like this rush in from all sides. Researchers from across the country are linking to the nanoManipulator, using special Internet2 connections engineered by Carolina computer scientists. And the labs have grown accustomed to a steady stream of visitors. The late Chancellor Michael Hooker, when he visited the lab, bent a nanotube that sprang back in an unexpected way, inspiring a new line of study.

“What we build is an instrument anyone can use,” Washburn says. “It attracts scientists with a bunch of different perspectives. So we get that excitement, that exchange of ideas.”

The excitement has helped to establish the project as a trendsetter. In a few busy weeks last winter, the researchers accepted an invitation to the White House, took part in America’s Millennial Celebration on the National Mall, and hooked up to a live experiment during “Future Visions,” a session broadcast from Washington by C-SPAN on New Year’s Day.

All of this has been good fun and great PR, the researchers say, but they would just as soon be spending the time doing science. Russ Taylor, weary of questions about how nanotechnology will change the world, takes great pains to deflate the rhetoric, comparing the nanoManipulator to a sandbox in which scientists push objects around or make marks in the sand. The technology, he says, still has a long way to go.

“A lot of people, when they talk about nanotechnology, are already at the flying stage,” Taylor says. “They’re saying, ‘When we have equipment that will assemble itself into whatever we need, how will that affect the world economy?’ Now, whoa.” He laughs. “Nanotechnology has taken on the role of being the next big thing. And it will do amazing things. But we don’t know what all of those amazing things are yet.”

Neil Caudle was the editor of Endeavors for fifteen years.

This research is funded by the National Center for Research Resources of the National Institutes of Health, the National Science Foundation, the Office of Naval Research, and the U.S. Army Research Office.